![NLP Tutorial [History, Working, Implements, Components, Libraries]](https://techsore.com/wp-content/uploads/2019/09/NLP-Tutorial-History-Working-Implements-Components-Libraries-1.jpg)

NLP Tutorial [History, Working, Implements, Components, Libraries]

This NLP tutorial is aimed at beginners who want to start a career in computer programming. Natural Language Processing (NLP) is a branch of data science that provides a systematic way to analyze, understand, and derive knowledge from text data efficiently. It is also a part of Artificial Intelligence that helps computers understand, interpret, and manipulate human language.

NLP helps developers organize and structure information to perform tasks such as translation, relationship extraction, speech recognition, and topic segmentation. NLP models are often built in the Python programming language. NLP is a way for computers to analyze, understand, and derive meaning from human languages such as English, Spanish, and Hindi.

Developing NLP applications is challenging because computers traditionally require humans to communicate with them in a programming language. Computers handle programming languages well, but human language is far less regular. This is a basic NLP tutorial for readers from beginner to expert level.

Table of Contents.

- History of NLP

- How Does NLP Work?

- Components of NLP

- Important Libraries For NLP Python

- NLP Writing System

- How To Implement NLP?

- NLP Examples

- Future of NLP

- Natural Language Vs Computer Language

- Techniques of NLP

- Benefits of NLP

- Disadvantages of NLP

- Conclusion

History of NLP

Here are the key events in the history of NLP.

| Year | Event |
| --- | --- |
| 1950 | NLP started when Alan Turing published an article called "Computing Machinery and Intelligence." |
| 1950s | Attempts to automate translation between Russian and English. |
| 1960s | The work of Chomsky and others on formal language theory and generative syntax. |
| 1990s | Probabilistic, data-driven models became quite standard. |
| 2000s | A large amount of spoken and textual data became available. |

How Does NLP Work?

Before you learn how NLP works, let's understand how humans use language.

Every day we say thousands of words that other people interpret to do countless things. We consider this simple communication, but we are all aware that words run much deeper than that. There is always some context that we derive from what we say and how we say it. NLP does not focus on voice modulation; it draws on contextual patterns.

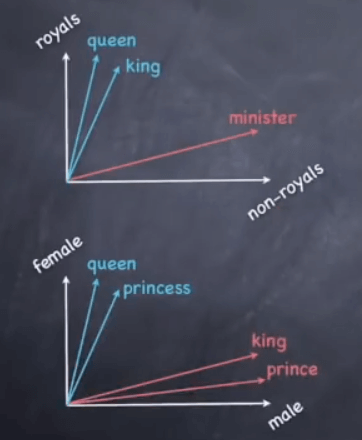

- Boy is to girl as prince is to __________?

- Meaning(prince) - meaning(boy) + meaning(girl) = ?

- The answer is: princess

Here, you can easily relate the words because a boy is male and a girl is female. In the same way, prince is masculine, and its feminine counterpart is princess.

- Prince is to princes as princess is to _______?

- The answer is: princesses

Here, we see two words, prince and princes, where one is singular and the other is plural. Therefore, when the word princess comes up, it automatically correlates with princesses, again singular to plural.

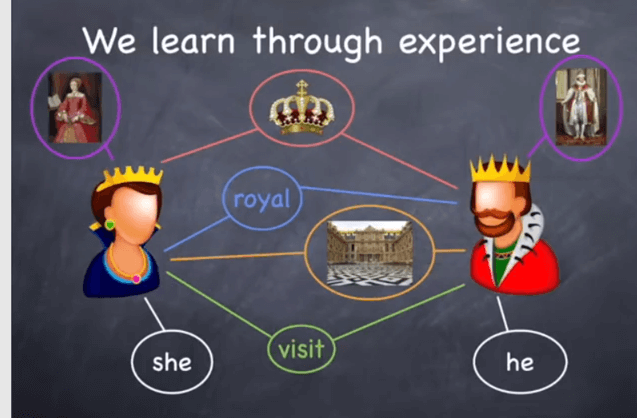

So here the biggest question is: how do we know what words mean? Let's say, who would we call a princess?

The answer is that we learn this through experience. However, the main question is how a computer can learn the same thing. We need to give a machine enough data to learn through experience. We can feed it details such as the following.

Some feed details

- The princess's mother.

- The princess is generous.

- Her Majesty the princess.

- The crown of Princess Elizabeth.

- The princess’s speech during a state visit.

From the above examples, the machine comes to understand the entity princess. It does so by building word vectors, where each word vector is built from the surrounding words.

A machine creates these vectors:

- As it learns from multiple datasets

- Using machine learning algorithms

- With each word vector built from the surrounding words

Here is the formula.

- Meaning(prince) - meaning(boy) + meaning(girl) = ?

- This amounts to performing simple algebraic operations on word vectors.

- Vector(prince) - vector(boy) + vector(girl) = ?

- To which the machine answers: princess.
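The vector arithmetic above can be sketched in a few lines of Python. This is only a toy illustration: the three-dimensional vectors below are hand-made assumptions (royalty, maleness, femaleness), whereas a real system would learn vectors with many more dimensions from large amounts of text. The function name `analogy` is also just an illustrative choice.

```python
import math

# Hand-made toy vectors: [royalty, maleness, femaleness].
# These values are illustrative assumptions, not learned from data.
VECTORS = {
    "boy": [0.1, 1.0, 0.0],
    "girl": [0.1, 0.0, 1.0],
    "prince": [1.0, 1.0, 0.0],
    "princess": [1.0, 0.0, 1.0],
    "castle": [0.9, 0.1, 0.1],
}


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def analogy(a, b, c):
    """Answer 'b is to c as a is to ?' using vector(a) - vector(b) + vector(c)."""
    target = [x - y + z for x, y, z in zip(VECTORS[a], VECTORS[b], VECTORS[c])]
    # Exclude the three input words, then pick the closest remaining word.
    candidates = [w for w in VECTORS if w not in {a, b, c}]
    return max(candidates, key=lambda w: cosine(target, VECTORS[w]))


print(analogy("prince", "boy", "girl"))  # princess
```

With these toy values, subtracting "boy" removes the male component and adding "girl" adds the female one, so the result lands on "princess".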

Components of NLP

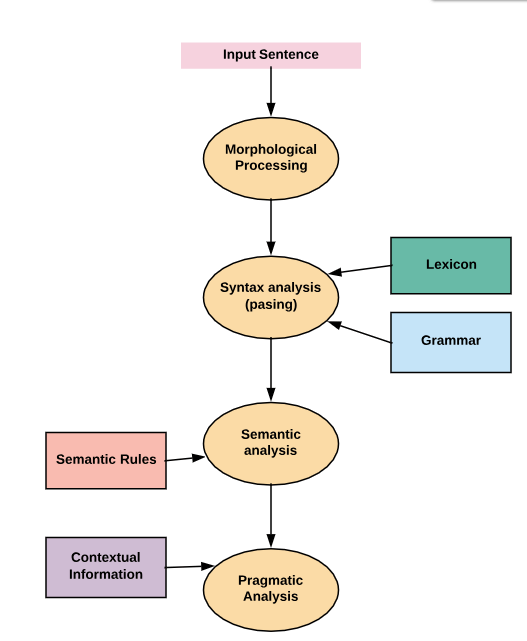

There are five main components of NLP.

- Morphological and Lexical Analysis

- Syntactic Analysis

- Semantic Analysis

- Discourse Integration

- Pragmatic Analysis

Morphological and Lexical Analysis

Lexical analysis deals with the vocabulary of a language, which includes its words and expressions. It involves analyzing, identifying, and describing the structure of words. It also includes dividing a text into paragraphs, sentences, and words.

Individual words are analyzed into their components, and non-word tokens such as punctuation are separated from the words.
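As a rough sketch of this step, the following Python code splits a text into paragraphs, sentences, and tokens using only the standard `re` module. The function names and the regular expressions are illustrative assumptions; libraries such as NLTK or spaCy handle many more edge cases (abbreviations, hyphenated words, and so on).

```python
import re


def split_paragraphs(text):
    """Split on blank lines and drop empty paragraphs."""
    return [p.strip() for p in re.split(r"\n\s*\n", text) if p.strip()]


def split_sentences(paragraph):
    """Naive split after '.', '!' or '?' followed by whitespace."""
    return [s for s in re.split(r"(?<=[.!?])\s+", paragraph.strip()) if s]


def tokenize(sentence):
    """Return words and punctuation marks as separate tokens."""
    return re.findall(r"\w+|[^\w\s]", sentence)


print(tokenize("Hello, world!"))  # ['Hello', ',', 'world', '!']
```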

Syntactic Analysis

Syntactic analysis, also called parsing, follows lexical analysis. The objective of this stage is to check whether the text is well formed by comparing it against the rules of formal grammar.

By contrast, a phrase like “hot ice-cream” is grammatically fine and would be rejected only by a semantic analyzer.

In this sense, syntactic analysis may be described as the process of analyzing strings of symbols in natural language for conformance to the rules of a formal grammar.
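To make "conforming to the rules of a formal grammar" concrete, here is a minimal sketch of a recognizer for a tiny context-free grammar. The lexicon, the grammar rules, and the function names are all hypothetical and chosen only for illustration; real parsers use far larger grammars or statistical models.

```python
# Toy lexicon (word -> part-of-speech tag). Illustrative assumption only.
LEXICON = {
    "the": "Det", "a": "Det",
    "boy": "N", "girl": "N", "gate": "N",
    "hot": "Adj", "green": "Adj",
    "closes": "V", "sees": "V",
}

# Toy grammar: each non-terminal maps to its possible expansions.
GRAMMAR = {
    "S": [["NP", "VP"]],
    "NP": [["Det", "N"], ["Det", "Adj", "N"]],
    "VP": [["V", "NP"], ["V"]],
}


def parse(symbol, tags, pos):
    """Return every position reachable after matching `symbol` from `pos`."""
    if symbol not in GRAMMAR:  # terminal symbol: a part-of-speech tag
        return {pos + 1} if pos < len(tags) and tags[pos] == symbol else set()
    ends = set()
    for rule in GRAMMAR[symbol]:
        positions = {pos}
        for part in rule:
            positions = {e for p in positions for e in parse(part, tags, p)}
        ends |= positions
    return ends


def is_grammatical(sentence):
    """True if the whole sentence can be derived from S in the toy grammar."""
    words = sentence.lower().split()
    if any(w not in LEXICON for w in words):
        return False
    tags = [LEXICON[w] for w in words]
    return len(tags) in parse("S", tags, 0)


print(is_grammatical("The boy closes the gate"))  # True
print(is_grammatical("Boy the closes gate"))      # False
```

Note that this check looks only at structure: a sentence can pass it and still be meaningless, which is where semantic analysis comes in.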

Semantic Analysis

Semantic analysis takes the structures created by the syntactic analyzer and assigns meanings to them. This component maps linear sequences of words into structures that show how the words are associated with each other.

Semantics focuses only on the literal meaning of words, phrases, and sentences. It abstracts only the dictionary meaning, or the real meaning, from the given context. The structures assigned by the syntactic analyzer always have some assigned meaning.

E.g. “colorless green idea.” This would be rejected by semantic analysis because “colorless green” does not make any sense.
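One very simplified way to picture a semantic check is a table of properties that each noun can sensibly have, against which an adjective is tested. The feature tables and the function `is_meaningful` below are hypothetical and exist only to illustrate the idea; real semantic analyzers rely on much richer knowledge sources.

```python
# Hypothetical feature table: the properties each noun sensibly has.
NOUN_FEATURES = {
    "ice-cream": {"temperature": "cold"},
    "coffee": {"temperature": "hot"},
    "leaf": {"color": "green"},
    "idea": {},  # abstract noun: no temperature, no color
}

# What each adjective claims about the noun it modifies.
ADJECTIVE_CLAIMS = {
    "hot": ("temperature", "hot"),
    "cold": ("temperature", "cold"),
    "green": ("color", "green"),
}


def is_meaningful(adjective, noun):
    """Accept the phrase only if the adjective's claim matches the noun."""
    attribute, value = ADJECTIVE_CLAIMS[adjective]
    return NOUN_FEATURES[noun].get(attribute) == value


print(is_meaningful("hot", "coffee"))     # True
print(is_meaningful("hot", "ice-cream"))  # False
print(is_meaningful("green", "idea"))     # False
```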

Discourse Integration

Discourse integration considers the larger context for any smaller part of a natural language structure. Natural language is complicated, and most of the time a sequence of text depends on prior discourse.

This concept often occurs in pragmatic ambiguity. Discourse integration means making sense of the context: the meaning of any single sentence depends on the sentences that precede it, and it can also affect the meaning of the sentence that follows.

E.g. the word “that” in the sentence “He wanted that” depends on the prior discourse context.

Pragmatic Analysis

Pragmatic analysis deals with outside-world knowledge, meaning knowledge that is external to the documents and queries. It focuses on reinterpreting what was said in terms of what was actually meant, deriving the aspects of language that require real-world knowledge.

Moreover, it deals with the overall communicative and social content and its effect on interpretation. Pragmatic analysis helps users discover the intended effect by applying a set of rules that characterize cooperative dialogues.

E.g. “close the gate” should be interpreted as a request instead of an order.

Important Libraries For NLP Python

- Scikit-learn: machine learning in Python.

- NLTK: a complete toolkit for all NLP techniques.

- Pattern: a web mining module with tools for NLP and machine learning.

- TextBlob: an easy-to-use NLP tools API, built on top of NLTK and Pattern.

- spaCy: industrial-strength NLP with Python and Cython.

- Gensim: topic modelling for humans.

- Stanford CoreNLP: NLP services and packages from the Stanford NLP Group.

NLP Writing System

The kind of writing system used for a language is one of the deciding factors in determining the best approach for text pre-processing.

- Logographic: a large number of individual symbols represent words. E.g. Japanese, Mandarin.

- Syllabic: individual symbols represent syllables.

- Alphabetic: individual symbols represent sounds.

The majority of writing systems use the syllabic or alphabetic system. Even English, with its relatively simple writing system based on the Roman alphabet, uses logographic symbols, which include Arabic numerals, currency symbols ($), and other special symbols.

This poses the following challenges.

- Extracting meaning from a text is challenging.

- NLP depends on the quality of the corpus. If the domain is vast, it is hard to understand the context.

- There is a dependence on the character set and the language.

How To Implement NLP?

Below are popular methods used for NLP.

Machine Learning: The learning procedures used in machine learning automatically focus on the most common cases. By contrast, when we write rules by hand, they are often not correct at all because of human error.

Statistical Inference: NLP can make use of statistical inference algorithms. These help you produce models that are robust to unfamiliar input, e.g. text containing words or structures that have not been seen before.
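As one small example of a statistical approach, the sketch below estimates bigram probabilities (how likely a word is to follow another) from a tiny corpus. It uses add-one smoothing, which is an assumption made here so that word pairs never seen in training still receive a small, non-zero probability. The corpus and function names are illustrative only.

```python
from collections import Counter


def train_bigram_model(sentences):
    """Count contexts and bigrams over whitespace-tokenized sentences."""
    context_counts, bigram_counts, vocab = Counter(), Counter(), set()
    for sentence in sentences:
        tokens = ["<s>"] + sentence.lower().split() + ["</s>"]
        vocab.update(tokens)
        context_counts.update(tokens[:-1])  # every token that has a successor
        bigram_counts.update(zip(tokens, tokens[1:]))
    return context_counts, bigram_counts, len(vocab)


def bigram_probability(model, previous, word):
    """P(word | previous) with add-one (Laplace) smoothing."""
    context_counts, bigram_counts, vocab_size = model
    return (bigram_counts[(previous, word)] + 1) / (context_counts[previous] + vocab_size)


corpus = ["the princess is generous", "the princess is kind"]
model = train_bigram_model(corpus)
print(bigram_probability(model, "the", "princess"))  # frequent pair: higher
print(bigram_probability(model, "the", "dragon"))    # unseen pair: small but > 0
```

Nothing here was written as a hand-made rule: the probabilities come entirely from counting the data, which is the main contrast with the rule-based approach.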

NLP Examples

Today, NLP is a widely used technology. Here are some common applications of NLP.

Information retrieval & Web Search

Google, Yahoo, Bing, and other search engines base their machine translation technology on NLP deep learning models. This allows an algorithm to read text on a webpage, interpret its meaning, and translate it into another language.

Grammar correction

This technique is widely used by word-processing software such as MS Word for spelling correction and grammar checking.
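A very simple form of spelling correction can be sketched with Python's standard `difflib` module, which finds the closest match for a word in a known vocabulary. The vocabulary, the similarity cutoff of 0.8, and the function names are assumptions for illustration; real word processors use much larger dictionaries and also take context into account.

```python
import difflib

# Tiny illustrative vocabulary; a real checker would use a full dictionary.
VOCABULARY = ["natural", "language", "processing", "computer", "machine"]


def correct_word(word, vocabulary=VOCABULARY, cutoff=0.8):
    """Return the closest vocabulary word, or the word itself if none is close."""
    matches = difflib.get_close_matches(word.lower(), vocabulary, n=1, cutoff=cutoff)
    return matches[0] if matches else word


def correct_text(text):
    """Correct each whitespace-separated word independently."""
    return " ".join(correct_word(w) for w in text.split())


print(correct_text("natral langauge procesing"))  # natural language processing
```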

Question Answering

Type in keywords to ask questions in natural language.

Text Summarization

The process of summarizing the important information from a source to produce a shortened version.
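One classic way to do this is extractive summarization: score each sentence by how frequent its words are in the whole text, then keep the top-scoring sentences. The sketch below is a minimal version of that idea; the stop-word list, the scoring formula, and the function name `summarize` are illustrative assumptions rather than a standard implementation.

```python
import re
from collections import Counter

# Small illustrative stop-word list; real lists are much longer.
STOPWORDS = {"the", "a", "an", "is", "are", "of", "to", "and", "in", "it",
             "that", "this", "for", "on", "with", "as", "by"}


def content_words(text):
    """Lowercased words with stop words removed."""
    return [w for w in re.findall(r"[a-z']+", text.lower()) if w not in STOPWORDS]


def summarize(text, max_sentences=1):
    """Keep the sentences whose words are most frequent in the whole text."""
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    frequency = Counter(content_words(text))

    def score(sentence):
        words = content_words(sentence)
        return sum(frequency[w] for w in words) / len(words) if words else 0.0

    ranked = sorted(range(len(sentences)), key=lambda i: score(sentences[i]), reverse=True)
    keep = sorted(ranked[:max_sentences])  # restore original sentence order
    return " ".join(sentences[i] for i in keep)


text = ("NLP helps computers understand language. "
        "The weather was nice yesterday. "
        "Computers use NLP to translate language.")
print(summarize(text, max_sentences=2))
```

In this example the sentence about the weather shares no frequent words with the rest of the text, so it is the one left out.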

Machine Translation

The use of computer applications to translate text or speech from one natural language to another.

Sentiment Analysis

Sentiment analysis helps companies analyze a large number of reviews of a product. It also allows their customers to provide a review of a particular product.
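The simplest kind of sentiment analysis counts positive and negative words from a lexicon. The sketch below does that and flips the polarity of a word that directly follows a negation such as "not". The word lists and the function name `sentiment` are small, hypothetical examples; production systems typically use trained models or much larger lexicons.

```python
import re

# Tiny illustrative lexicons; real ones contain thousands of entries.
POSITIVE = {"good", "great", "excellent", "love", "helpful", "fast"}
NEGATIVE = {"bad", "poor", "terrible", "hate", "slow", "rude"}
NEGATIONS = {"not", "never", "no"}


def sentiment(review):
    """Classify a review as 'positive', 'negative', or 'neutral'."""
    tokens = re.findall(r"[a-z']+", review.lower())
    score = 0
    for i, token in enumerate(tokens):
        polarity = (token in POSITIVE) - (token in NEGATIVE)  # +1, -1, or 0
        if i > 0 and tokens[i - 1] in NEGATIONS:
            polarity = -polarity  # "not good" counts as negative
        score += polarity
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


print(sentiment("Great product, fast delivery"))    # positive
print(sentiment("The support was rude and slow"))   # negative
print(sentiment("Not good"))                        # negative
```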

Future of NLP

- Human-readable natural language processing is the biggest AI problem. It is almost the same as solving the central artificial intelligence problem and making computers as intelligent as people.

- Future computers or machines, with the help of NLP, will be able to learn from information online and apply it in the real world; however, a lot of work is still required in this regard.

- The Natural Language Toolkit (NLTK) will become more effective, which is good for the future of NLP.

- Combined with natural language generation, computers will become more capable of receiving and providing useful and resourceful information or data.

Natural Language Vs Computer Language

| Parameter | Natural Language | Computer Language |
| --- | --- | --- |
| Ambiguity | Natural languages are ambiguous in nature. | Computer languages are designed to be unambiguous. |
| Redundancy | Natural languages employ lots of redundancy. | Formal languages are less redundant. |
| Literalness | Natural languages are full of idiom and metaphor. | Formal languages mean exactly what they say. |

Techniques of NLP

Named Entity Recognition

Named entity recognition (NER) is one of the most basic and useful techniques in NLP for extracting entities from text. It highlights the fundamental concepts and references in the text. NER identifies entities such as people, locations, companies, dates, etc.
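To show what the output of NER looks like, here is a deliberately simple sketch that uses a lookup list of known names (a gazetteer) plus a regular expression for four-digit years. The entity lists, the labels, and the function `find_entities` are hypothetical; libraries such as spaCy or NLTK use trained statistical models instead of fixed lists.

```python
import re

# Hypothetical gazetteer of known names, grouped by entity label.
GAZETTEER = {
    "PERSON": ["Alan Turing", "Noam Chomsky"],
    "ORG": ["Google", "Stanford NLP Group"],
    "LOCATION": ["London", "Spain"],
}

# Four-digit years from 1900 to 2099 are treated as dates.
DATE_PATTERN = re.compile(r"\b(?:19|20)\d{2}\b")


def find_entities(text):
    """Return (entity text, label) pairs in order of appearance."""
    found = []
    for label, names in GAZETTEER.items():
        for name in names:
            for match in re.finditer(r"\b" + re.escape(name) + r"\b", text):
                found.append((match.start(), match.group(), label))
    for match in DATE_PATTERN.finditer(text):
        found.append((match.start(), match.group(), "DATE"))
    return [(entity, label) for _, entity, label in sorted(found)]


text = "Alan Turing published a paper in 1950. Google has an office in London."
print(find_entities(text))
# [('Alan Turing', 'PERSON'), ('1950', 'DATE'), ('Google', 'ORG'), ('London', 'LOCATION')]
```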

Aspect Mining

Aspect mining identifies the different aspects in the text. When used in conjunction with sentiment analysis, it extracts complete information from the text. One of the simplest methods of aspect mining is using part-of-speech tagging.

Aspects and sentiment:

- Customer service

- Call center: negative

- Agent: negative

- Pricing/Premium: positive
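The pairing of aspects with sentiments can be sketched as follows. Instead of part-of-speech tagging, this simplified version swaps in keyword matching: it splits a review into clauses, looks for aspect keywords in each clause, and labels the aspect with the sentiment of that clause. The keyword lists, sentiment words, and the function `mine_aspects` are illustrative assumptions.

```python
import re

# Hypothetical aspect keywords and sentiment words for an insurance review.
ASPECT_TERMS = {
    "call center": ["call center"],
    "agent": ["agent"],
    "pricing/premium": ["pricing", "premium", "price"],
}
POSITIVE = {"fair", "good", "great", "affordable"}
NEGATIVE = {"rude", "slow", "bad", "unhelpful"}


def mine_aspects(review):
    """Map each aspect mentioned in the review to 'positive' or 'negative'."""
    results = {}
    # Treat each clause (split on punctuation and the word "but") separately.
    clauses = re.split(r"[.,;!?]|\bbut\b", review.lower())
    for clause in clauses:
        words = set(re.findall(r"[a-z]+", clause))
        score = len(words & POSITIVE) - len(words & NEGATIVE)
        if score == 0:
            continue  # no clear sentiment in this clause
        label = "positive" if score > 0 else "negative"
        for aspect, terms in ASPECT_TERMS.items():
            if any(term in clause for term in terms):
                results[aspect] = label
    return results


review = "The call center was slow, the agent was rude, but the premium is fair."
print(mine_aspects(review))
# {'call center': 'negative', 'agent': 'negative', 'pricing/premium': 'positive'}
```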

Topic Modeling

Topic modeling is one of the more complicated methods for identifying natural topics in the text. A prime benefit of topic modeling is that it is an unsupervised technique, so a labeled training dataset is not needed. There are a few algorithms for topic modeling.

- Latent Semantic Analysis(LSA)

- Latent Dirichlet Allocation(LDA)

- Correlated Topic Model(CTM)

Benefits of NLP

- Improved accuracy and efficiency of documentation.

- Ability to automatically create a readable summary of a text.

- Useful for personal assistants such as Alexa.

- It allows a company to use chatbots for customer support.

- It makes sentiment analysis simpler to perform.

- Users can ask questions about any topic and get a direct answer within seconds.

- NLP systems give answers to questions in natural language.

- NLP systems provide exact answers to questions, without redundant or unwanted information.

- The accuracy of answers increases with the amount of relevant information given in the question.

- NLP helps computers communicate with humans in their own language and scales other language-related tasks.

- It allows you to process more language-based data than a human being could, without fatigue and in an unbiased and consistent way.

- It structures highly unstructured data sources. NLP is also used in data science together with deep learning.

Disadvantages of NLP

- Complex query language: the system may not be able to give the right answer if the question is poorly worded or ambiguous.

- The system is built for a single, specific task only; it cannot adapt to new domains and problems because of its limited functions.

- An NLP system may lack a user interface with features that allow users to interact further with the system.

Conclusion

I hope this tutorial helps you get the most out of your efforts when starting with NLP in Python and data science. It has not only given you an idea of the basic techniques but also introduced some of the more sophisticated techniques available today. Syntactic analysis and semantic analysis are the main techniques used to complete NLP tasks.

Programming languages are precise, unambiguous, and highly structured, and computers can also be addressed through a limited number of clearly enunciated voice commands. Human speech, however, is not always precise; it is often ambiguous, and its linguistic structure can depend on many complex variables, including slang, regional dialects, and social context.

That’s It.